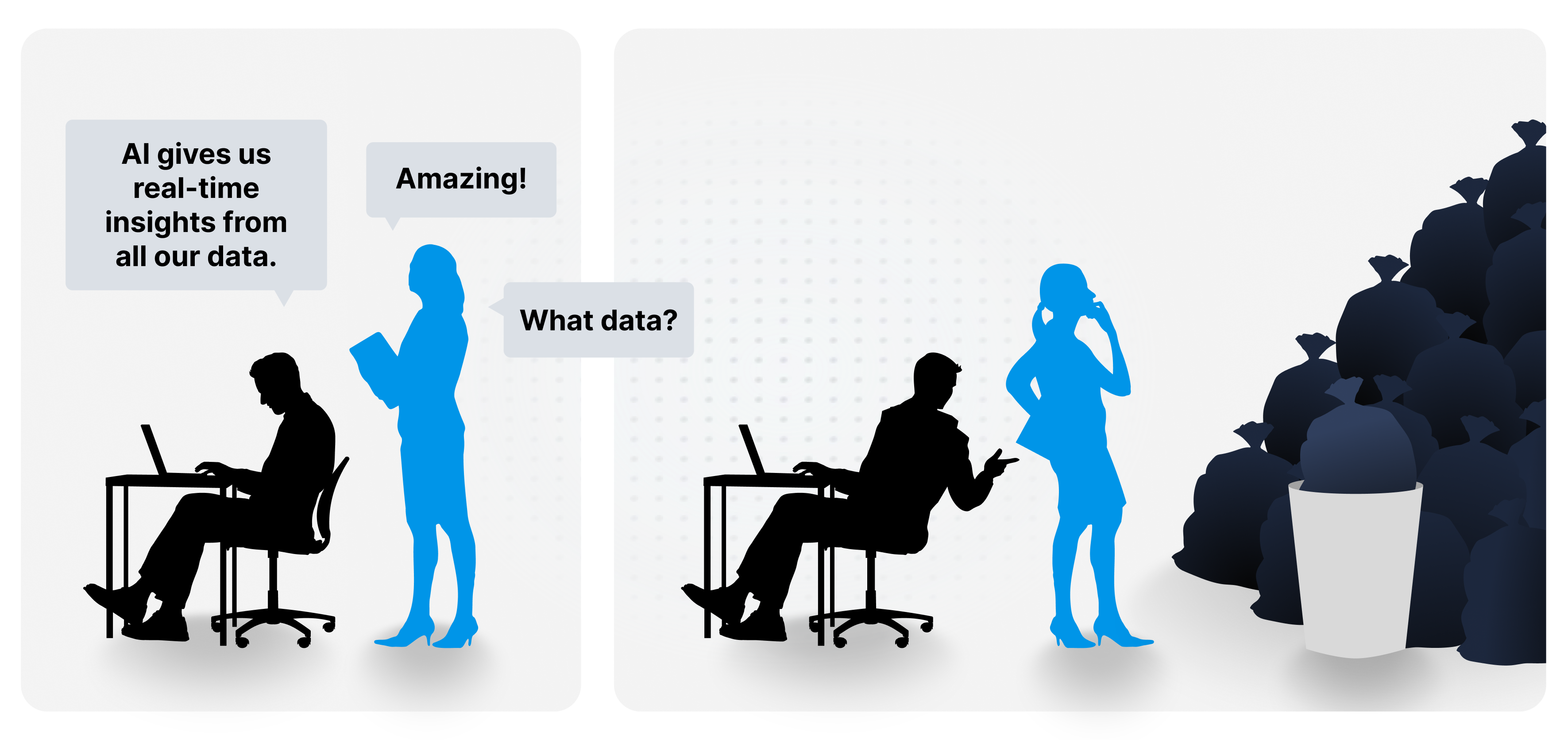

Data Garbage In, AI Garbage Out: Why Governance Matters More Than Ever

The adage "Garbage In, Garbage Out" (GIGO) has been a foundational concept in computing since the 1950s. It underscores a simple truth: the quality of output is determined by the quality of input. In today's context, as artificial intelligence (AI) becomes integral to business operations, this principle is more relevant than ever.

AI systems, regardless of their sophistication, rely heavily on the data they are fed. If the input data is flawed, whether outdated, inconsistent, or incomplete, the AI's output will reflect these deficiencies, potentially leading to misguided decisions.

AI is integrated into dashboards, customer service bots, analytics tools, and decision-making processes. However, there is a significant issue: AI systems do not understand the concept of truth. They cannot recognize when data is fragmented, duplicated, outdated, or misused. Instead, they learn and make predictions solely based on the patterns they are given.

Businesses rely more on data, but trust it less

According to Salesforce’s 2024 State of Data and Analytics report, 76% of business leaders believe that AI has made being data-driven more important than ever. However, only 36% of these leaders trust the accuracy of their company's data (a decrease of 27% in just one year).

That’s a dangerous combination: growing reliance on data and AI, coupled with declining trust in the data that underpins them. This creates an illusion of accuracy, with automated results that appear polished and intelligent but are founded on shaky ground.

What is data governance, and why does AI depend on it?

When data is messy, AI does not stop or warn you; it simply processes faster, amplifying flawed assumptions with confidence.

AI technologies are designed to process vast amounts of data rapidly, identifying patterns and generating insights. However, without proper data governance, these systems can inadvertently amplify existing data issues. For instance, if an AI model is trained on biased or incomplete data, its predictions and recommendations will likely perpetuate those biases or gaps.

Real-world examples abound where AI systems have produced misleading results due to poor data quality. Such instances highlight the critical need for organizations to prioritize data integrity before deploying AI solutions.

The effects of poor data quality may not be immediately apparent. For example, a financial report might indicate strong quarterly performance, but if it relies on outdated revenue definitions, it could misrepresent the actual figures. Similarly, a churn model may fail to identify early warning signs due to inconsistent labeling across different regions. Additionally, a supply chain forecast could lead to over-ordering inventory if it is based on duplicated entries from an unconnected system.
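As a rough illustration of how such issues can be surfaced before data reaches a model, here is a minimal Python sketch of three checks: duplicated entries, stale records, and inconsistent labels. The records, field names, allowed labels, and age threshold are all hypothetical, chosen only for the example.

```python
from datetime import date

# Hypothetical customer records; every field name here is an assumption.
records = [
    {"order_id": 1, "region": "EMEA", "status": "churned", "updated": date(2024, 5, 1)},
    {"order_id": 2, "region": "APAC", "status": "Churn", "updated": date(2021, 1, 15)},
    {"order_id": 2, "region": "APAC", "status": "Churn", "updated": date(2021, 1, 15)},
    {"order_id": 3, "region": "AMER", "status": "active", "updated": date(2024, 4, 20)},
]

# Assumed agreed-upon label vocabulary (what a shared glossary would define).
ALLOWED_STATUSES = {"active", "churned"}


def find_duplicates(rows, key):
    """Return the key values that occur more than once."""
    seen, dupes = set(), set()
    for row in rows:
        value = row[key]
        if value in seen:
            dupes.add(value)
        seen.add(value)
    return sorted(dupes)


def find_stale(rows, as_of, max_age_days):
    """Return the ids of rows last updated more than max_age_days before as_of."""
    stale = {r["order_id"] for r in rows if (as_of - r["updated"]).days > max_age_days}
    return sorted(stale)


def find_invalid_labels(rows, allowed):
    """Return the status labels that are not in the agreed vocabulary."""
    return sorted({r["status"] for r in rows if r["status"] not in allowed})


report = {
    "duplicates": find_duplicates(records, "order_id"),
    "stale": find_stale(records, as_of=date(2024, 6, 1), max_age_days=365),
    "invalid_labels": find_invalid_labels(records, ALLOWED_STATUSES),
}
print(report)
```

Checks like these do not fix the data, but they turn silent problems into visible ones that an owner can act on.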

Building trust through data governance

The problem here isn’t the AI itself. It’s what we’re feeding into it. And that brings us to a core, often overlooked discipline: data governance.

To realize the full potential of AI, organizations must first establish robust data governance frameworks. Doing so not only improves data quality but also lays the foundation for trustworthy AI applications.

This involves:

- Defining and maintaining a shared business glossary

- Tracking the full lineage of key metrics and datasets

- Showing exactly where data comes from, how it’s used, and who owns it

- Documenting the rules and logic behind KPIs and calculations
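To make these practices more concrete, the sketch below models a glossary entry and a simple lineage map in plain Python. It is only an illustration under assumed names: the `GlossaryTerm` fields, the dataset names, and the `upstream_sources` helper are hypothetical and do not represent any particular governance tool.

```python
from dataclasses import dataclass


@dataclass
class GlossaryTerm:
    """One shared business definition, with an owner and a documented rule."""
    name: str
    definition: str
    owner: str
    calculation: str


# Hypothetical glossary entry for a KPI.
net_revenue = GlossaryTerm(
    name="Net revenue",
    definition="Invoiced revenue minus refunds and discounts.",
    owner="Finance data steward",
    calculation="SUM(invoice_amount) - SUM(refunds) - SUM(discounts)",
)

# Hypothetical lineage map: each dataset lists its direct upstream sources.
LINEAGE = {
    "quarterly_revenue_report": ["revenue_mart"],
    "revenue_mart": ["crm_orders", "billing_invoices"],
    "crm_orders": [],
    "billing_invoices": [],
}


def upstream_sources(dataset, lineage):
    """Return every dataset that feeds `dataset`, directly or indirectly."""
    found = []
    stack = list(lineage.get(dataset, []))
    while stack:
        current = stack.pop()
        if current not in found:
            found.append(current)
            stack.extend(lineage.get(current, []))
    return sorted(found)


sources = upstream_sources("quarterly_revenue_report", LINEAGE)
print(net_revenue.name, "is owned by", net_revenue.owner)
print("Report depends on:", sources)
```

With even this much structure in place, a question such as "which systems feed this report, and who defines the metric?" has a recorded answer instead of depending on someone's memory.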

Integrating AI governance for responsible deployment

Beyond data governance, organizations must also focus on AI governance to ensure responsible and ethical AI deployment. Key components include:

- Model Documentation: Keeping detailed records of AI models, including their purpose, data sources, and decision-making processes.

- Risk Assessments: Evaluating potential risks associated with AI applications, ensuring they align with regulatory standards.

- Transparency Measures: Making AI operations understandable to stakeholders, fostering trust and accountability.

Prevent GIGO and build trustworthy AI with Dawiso

AI produces results based on the data provided. And the more confident the output appears, the more dangerous it can be.

Organizations embracing AI must first ensure their data is clean, governed, and understood. With Dawiso, you gain the structure and visibility needed to prevent GIGO, reduce AI risk, and build a foundation of trust.
