Agent Systems and Natural Language Query: The Two Major Directions Transforming AI and Data Management

The landscape of artificial intelligence and data management is evolving rapidly, with two distinct directions emerging as dominant trends. While natural language query systems and conversational analytics introduced a revolutionary way for users to interact with their data platforms, agent systems are now taking center stage as the next frontier in AI-driven data operations. Understanding these two major directions in AI is crucial for organizations looking to modernize their data infrastructure and maximize the value of their information assets.

Understanding the Two Major Directions in AI for Data

The transformation of how organizations interact with and manage their data has crystallized into two major directions in AI. These approaches represent fundamentally different philosophies about the role of artificial intelligence in data operations, yet both rely on sophisticated metadata management to function effectively.

1. Natural Language Query and Chat With My Data: The First Wave of AI Data Interaction

Natural language query systems, commonly known as chat with my data or conversational analytics platforms, emerged as the first major direction in AI for data management. These systems enable users to interact with data using plain everyday language rather than complex SQL queries or technical interfaces. Instead of writing code or navigating through multiple dashboards, users can simply ask questions like "How much have I sold this quarter?" or "Do we have data on customer retention rates?"

Vendors use various names for these capabilities. Natural Language Query (NLQ) is a common umbrella term, applied to features in platforms like ThoughtSpot, Tableau, and Power BI. Tableau branded its version "Ask Data" and Power BI calls its version Q&A, while other vendors refer to conversational analytics, AI-powered data chat, or search-driven analytics.

This approach to AI and data interaction gained significant traction because it democratized data access. Business users who lacked technical expertise could finally engage directly with their data platforms without depending on data analysts or engineers for every question. Natural language query systems serve as a new frontend layer that translates natural language into database queries, retrieves the relevant information, and presents it in an understandable format.

The implementation of chat with my data systems proved relatively straightforward for many organizations. These systems primarily focus on data retrieval and basic analysis, translating everyday language into a query language, such as SQL, that a database can execute. However, while natural language query systems solved the problem of data accessibility, they still required human users to formulate questions and interpret results.
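
To make the translation step concrete, here is a deliberately simplified sketch. Real products rely on language models and a semantic layer built from metadata; this version instead matches keywords against a small hand-written mapping and runs the generated SQL against an in-memory SQLite database. The `SEMANTIC_LAYER` and `PERIOD_FILTERS` dictionaries, the `sales` table, the hard-coded "current quarter", and the `translate` and `ask` functions are all hypothetical.

```python
import sqlite3

# Hypothetical semantic layer: business terms mapped to SQL fragments.
# Real NLQ products derive this mapping from metadata and language models.
SEMANTIC_LAYER = {
    "sold": "SUM(amount)",
    "orders": "COUNT(*)",
}
# Hypothetical time filters; "this quarter" is hard-coded as Q3 for the demo.
PERIOD_FILTERS = {
    "this quarter": "quarter = 'Q3'",
    "last quarter": "quarter = 'Q2'",
}

def translate(question: str) -> str:
    """Translate a plain-language question into SQL using keyword matching."""
    q = question.lower()
    measure = next((sql for term, sql in SEMANTIC_LAYER.items() if term in q), None)
    if measure is None:
        raise ValueError(f"No known measure in question: {question!r}")
    where = next((sql for term, sql in PERIOD_FILTERS.items() if term in q), "1 = 1")
    return f"SELECT {measure} FROM sales WHERE {where}"

def ask(conn: sqlite3.Connection, question: str):
    """Translate the question, run the SQL, and return both for transparency."""
    sql = translate(question)
    (value,) = conn.execute(sql).fetchone()
    return sql, value

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (quarter TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?)",
                 [("Q2", 100.0), ("Q3", 250.0), ("Q3", 50.0)])

sql, value = ask(conn, "How much have I sold this quarter?")
print(sql)
print(value)
```

Returning the generated SQL alongside the answer is a design choice worth copying: it lets a user or analyst check how the question was interpreted instead of trusting the number blindly.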

2. Agent Systems: The Next Evolution in AI Data Management

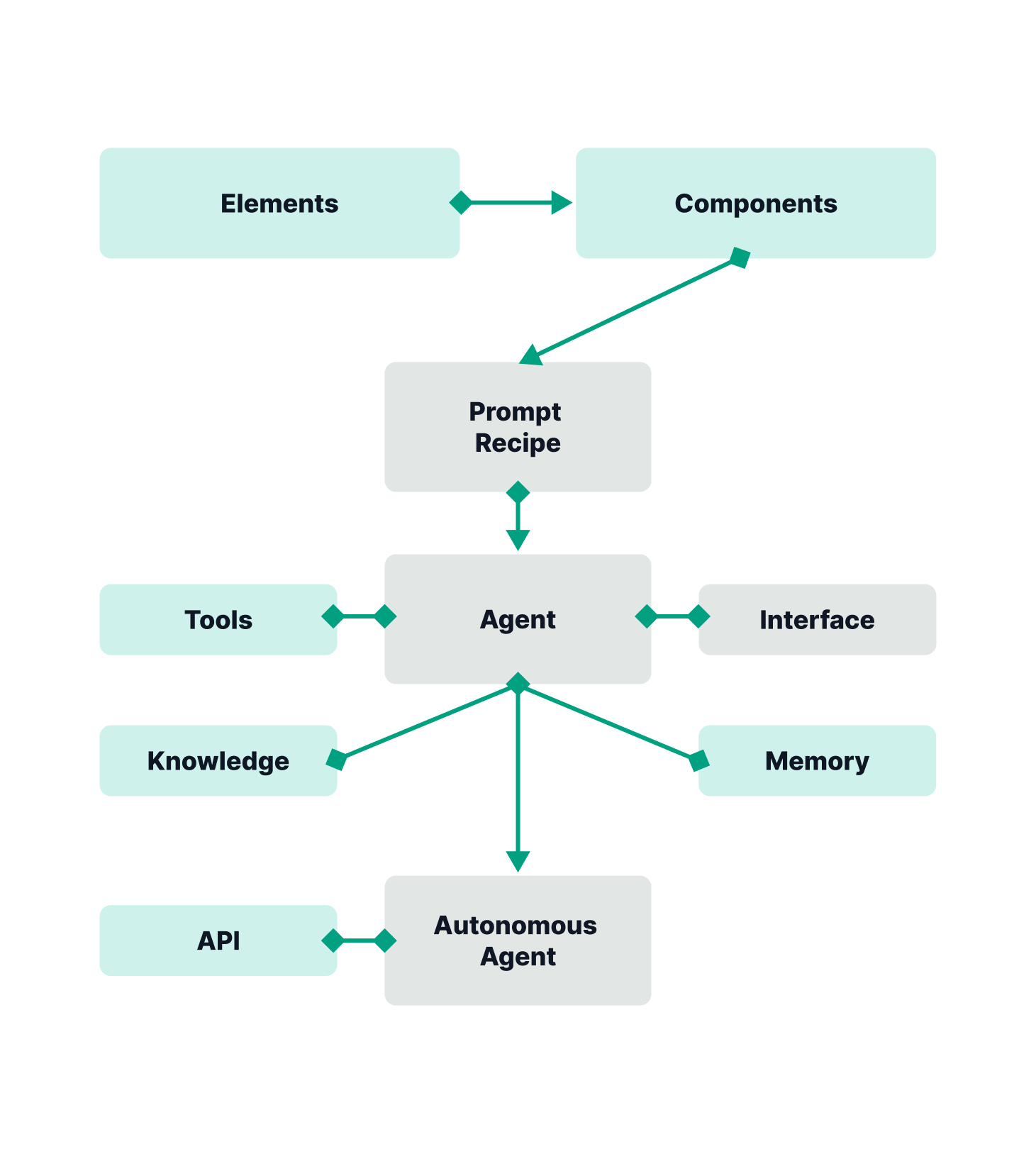

Agent systems represent the second and more advanced direction in AI for data operations. Unlike conversational analytics platforms that wait for user queries, agent systems proactively perform tasks and complete entire workflows autonomously. This fundamental shift moves AI from a reactive question-answering tool to a proactive digital worker capable of executing complex data operations.

The distinction between these two major directions in AI becomes clear when examining their operational models. While natural language query systems respond to questions, agent systems receive high-level instructions and then independently determine the steps needed to complete entire projects. An agent system might receive a task like "Create a weekly sales performance dashboard" and then autonomously identify required data sources, build data pipelines, perform transformations, and generate the visualization with little or no further human intervention.

Major technology companies are investing heavily in agent systems. Microsoft, for example, has developed the Fabric Data Agent, while Snowflake introduced Snowflake Intelligence, a conversational AI experience that allows business users to interact with enterprise data using natural language while maintaining security and governance frameworks. Keboola and other platforms have introduced AI data engineers that can receive tasks such as building data pipelines, managing data transformations, and generating complete reports autonomously.

How Agent Systems Transform Data Operations

Agent systems fundamentally change the nature of data work by automating tasks that traditionally required skilled data engineers and analysts. Understanding how agent systems operate provides insight into why they represent such a significant advancement in AI and data management.

The Workflow of Agent Systems in Data Engineering

The operational model of agent systems mirrors the way human data engineering teams collaborate. A data engineer might traditionally spend hours or days manually building a data pipeline, writing transformation logic, testing the workflow, and creating visualizations. Agent systems can complete comparable tasks much faster, in some cases within minutes, by following a structured workflow that leverages multiple specialized agents.

Consider a typical scenario where a data engineer launches multiple agents to complete a comprehensive data project. The first agent receives the high-level instruction: "I need to move this sales data from our CRM to our analytics warehouse." This agent understands the requirement and identifies the source and destination systems.

A second agent then receives the task of building the actual data pipeline. This agent designs the extraction process, determines necessary data transformations such as cleaning null values and standardizing formats, and configures the loading mechanism to store the processed data in the target location. The agent system autonomously writes the code, establishes the connections, and implements error handling.
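
The pipeline described above follows an extract, transform, load pattern. The sketch below shows the kind of code such an agent might produce, under simplifying assumptions: the CRM export is a hard-coded list of dictionaries, the analytics warehouse is an in-memory SQLite database, and the column names, accepted date formats, and cleaning rules are hypothetical.

```python
import sqlite3
from datetime import datetime

# Hypothetical CRM export; a real agent would read from the CRM's API or an export file.
CRM_ROWS = [
    {"id": 1, "customer": "  acme corp ", "amount": "1200.50", "closed": "2024-03-01"},
    {"id": 2, "customer": None, "amount": "300", "closed": "03/15/2024"},
    {"id": 3, "customer": "globex", "amount": None, "closed": "2024-04-02"},
]

def extract():
    return list(CRM_ROWS)

def parse_date(value: str) -> str:
    """Standardize dates to ISO 8601, accepting two assumed input formats."""
    for fmt in ("%Y-%m-%d", "%m/%d/%Y"):
        try:
            return datetime.strptime(value, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date: {value!r}")

def transform(rows):
    """Clean null values and standardize formats; return (clean, rejected)."""
    clean, rejected = [], []
    for row in rows:
        # Assumed rule: a missing amount makes the row unusable.
        if row["amount"] is None:
            rejected.append(row)
            continue
        try:
            clean.append((
                row["id"],
                (row["customer"] or "unknown").strip().title(),  # default missing names
                float(row["amount"]),
                parse_date(row["closed"]),
            ))
        except ValueError:
            rejected.append(row)  # error handling: quarantine the row, keep the run going
    return clean, rejected

def load(conn: sqlite3.Connection, rows) -> None:
    conn.execute("CREATE TABLE IF NOT EXISTS sales "
                 "(id INTEGER PRIMARY KEY, customer TEXT, amount REAL, closed TEXT)")
    with conn:  # commits on success, rolls back if any insert fails
        conn.executemany("INSERT INTO sales VALUES (?, ?, ?, ?)", rows)

conn = sqlite3.connect(":memory:")
clean, rejected = transform(extract())
load(conn, clean)
print(conn.execute("SELECT * FROM sales ORDER BY id").fetchall())
print(f"{len(rejected)} row(s) quarantined")
```

Quarantining bad rows rather than failing the whole run is one possible error-handling policy; a stricter pipeline could instead abort when the rejection rate crosses a threshold.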

A third agent might then take the transformed data and create a Power BI report that displays specific metrics requested by stakeholders. This agent understands visualization best practices, selects appropriate chart types, and configures the dashboard for optimal user experience. All of this happens with minimal human intervention beyond the initial task assignment.
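
The hand-off between these agents can be pictured as a chain of specialized workers that share a task context. The sketch below is a hypothetical, framework-free illustration: each "agent" is a plain Python class with a `run` method, the reasoning a real agent would delegate to a language model is replaced by hard-coded decisions, and the report is a plain dictionary standing in for a Power BI report definition. All class names, dataset names, and step names are assumptions.

```python
from dataclasses import dataclass, field

@dataclass
class TaskContext:
    """Shared state passed from one agent to the next."""
    instruction: str
    plan: dict = field(default_factory=dict)
    artifacts: dict = field(default_factory=dict)
    log: list = field(default_factory=list)

class PlannerAgent:
    def run(self, ctx: TaskContext) -> None:
        # A real agent would interpret the instruction with a language model;
        # here the source and destination are hard-coded assumptions.
        ctx.plan = {"source": "crm.opportunities", "target": "warehouse.sales"}
        ctx.log.append(f"planner: {ctx.plan['source']} -> {ctx.plan['target']}")

class PipelineAgent:
    def run(self, ctx: TaskContext) -> None:
        # Builds on the planner's output: the pipeline targets the planned destination.
        steps = ["extract", "drop_null_amounts", "standardize_dates", "load"]
        ctx.artifacts["pipeline"] = {"target": ctx.plan["target"], "steps": steps}
        ctx.log.append(f"pipeline: {len(steps)} steps")

class ReportAgent:
    def run(self, ctx: TaskContext) -> None:
        # Stand-in for a report definition built on the pipeline's output table.
        ctx.artifacts["report"] = {
            "dataset": ctx.artifacts["pipeline"]["target"],
            "visuals": [("line", "revenue by week"), ("bar", "revenue by region")],
        }
        ctx.log.append(f"report: {len(ctx.artifacts['report']['visuals'])} visuals")

def orchestrate(instruction: str, agents) -> TaskContext:
    """Run agents in sequence; each one reads and extends the shared context."""
    ctx = TaskContext(instruction)
    for agent in agents:
        agent.run(ctx)
    return ctx

ctx = orchestrate(
    "Move sales data from the CRM to the analytics warehouse and report on it",
    [PlannerAgent(), PipelineAgent(), ReportAgent()],
)
print("\n".join(ctx.log))
```

Keeping every intermediate decision in a shared context object also gives reviewers a single place to inspect what each agent did.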

The Role of Metadata in Both Natural Language Query and Agent Systems

Both conversational analytics platforms and agent systems depend critically on comprehensive metadata and semantic layers to function effectively. Metadata serves as the foundation that allows these AI systems to understand what data exists, where it resides, how it relates to other data, and what transformations are appropriate.

For agent systems, metadata is even more critical than for natural language query systems. When an agent needs to build a data pipeline, it must access metadata to understand data schemas, identify relationships between tables, recognize data quality issues, and determine appropriate transformation logic. Without rich metadata, agent systems would be unable to make informed decisions about data operations.
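
The sketch below illustrates how an agent might consult metadata before writing a pipeline. It uses foreign-key metadata to find the shortest join path between two tables with a breadth-first search, then collects any known quality issues recorded for the tables on that path. The `TableMeta` structure, the table names, and the sample quality note are hypothetical; a real metadata platform would expose this information through its own interfaces, which are not shown here.

```python
from collections import deque
from dataclasses import dataclass, field

@dataclass
class TableMeta:
    name: str
    columns: dict                                       # column name -> type
    foreign_keys: dict = field(default_factory=dict)    # column -> "table.column"
    quality_issues: list = field(default_factory=list)  # free-text notes

# Hypothetical catalog contents for illustration only.
CATALOG = {
    "orders": TableMeta("orders", {"id": "int", "customer_id": "int", "amount": "decimal"},
                        {"customer_id": "customers.id"}),
    "customers": TableMeta("customers", {"id": "int", "region_id": "int", "email": "text"},
                           {"region_id": "regions.id"},
                           ["email is 12% null"]),
    "regions": TableMeta("regions", {"id": "int", "name": "text"}),
}

def neighbours(table: str):
    """Yield (other_table, join_condition) pairs, following FKs in both directions."""
    for col, ref in CATALOG[table].foreign_keys.items():
        other, other_col = ref.split(".")
        yield other, f"{table}.{col} = {other}.{other_col}"
    for meta in CATALOG.values():
        for col, ref in meta.foreign_keys.items():
            if ref.split(".")[0] == table:
                yield meta.name, f"{meta.name}.{col} = {ref}"

def join_path(start: str, goal: str):
    """Breadth-first search for the shortest chain of joins between two tables."""
    queue = deque([(start, [start], [])])
    seen = {start}
    while queue:
        table, tables, joins = queue.popleft()
        if table == goal:
            return tables, joins
        for other, cond in neighbours(table):
            if other not in seen:
                seen.add(other)
                queue.append((other, tables + [other], joins + [cond]))
    return None  # no relationship recorded in the metadata

tables, joins = join_path("orders", "regions")
issues = [f"{t}: {issue}" for t in tables for issue in CATALOG[t].quality_issues]
print(joins)
print(issues)
```

Without the `foreign_keys` entries, the search would find no path at all, which is the practical meaning of an agent being "unable to make informed decisions" when metadata is missing.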

Tools like Dawiso support both major directions in AI by providing the metadata infrastructure these systems require. Whether an organization is implementing natural language query capabilities or more advanced agent systems, robust metadata management enables the AI to understand the data landscape and operate effectively.

Natural Language Query Versus Agent Systems: Comparing the Two Directions

While both approaches represent important directions in AI for data management, they serve different purposes and offer distinct advantages. Organizations need to understand these differences to make informed decisions about their AI strategy.

Complexity and Implementation Considerations

Natural language query systems are generally easier to implement and require less sophisticated AI capabilities, primarily needing natural language processing to interpret user questions, query generation to retrieve data, and presentation logic to format results. The technical barrier to entry is relatively low, which explains why chat with my data systems gained widespread adoption quickly.

Agent systems require significantly more advanced AI capabilities. These systems need reasoning abilities to break down complex tasks into steps, decision-making logic to choose appropriate approaches, and integration capabilities to work with multiple data tools and platforms. The implementation of agent systems is more complex, but the potential value is correspondingly higher.

Autonomy and Human Involvement

The level of autonomy represents a fundamental difference between these two major directions in AI. Conversational analytics platforms remain dependent on continuous human input, requiring users to formulate questions and interpret responses. These systems augment human decision-making but do not replace the need for human analysis and action.

Agent systems are designed to automate parts of a workflow, but their level of autonomy can vary. In many cases, they still require human approval or input at key steps to ensure accuracy and compliance. Rather than fully replacing human oversight, they act as intelligent assistants that streamline routine operations and support data teams in focusing on higher-level strategy and decision-making.
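
A common way to keep a human in the loop is an approval gate: the agent proposes each step, low-risk steps run automatically, and risky steps wait for a reviewer. The sketch below assumes a hypothetical risk rule (any step that writes to production needs approval) and models the reviewer as a callback function; in practice the approval might arrive through a ticket, a chat message, or a pull request review. The `Step` class and `run_with_approval` function are illustrative names.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

@dataclass
class Step:
    name: str
    writes_to_production: bool  # assumed risk flag

def run_with_approval(steps: List[Step],
                      approve: Callable[[Step], bool]) -> Tuple[List[str], Optional[Step]]:
    """Execute steps in order, pausing for human approval on risky ones."""
    executed = []
    for step in steps:
        if step.writes_to_production and not approve(step):
            # Stop the workflow instead of continuing past a rejected step.
            return executed, step
        executed.append(step.name)  # placeholder for performing the real action
    return executed, None

plan = [
    Step("profile source table", False),
    Step("build staging pipeline", False),
    Step("deploy pipeline to production", True),
    Step("publish dashboard", True),
]

# Hypothetical reviewer decision: approve the deployment, hold the dashboard.
def reviewer(step: Step) -> bool:
    return step.name != "publish dashboard"

done, blocked = run_with_approval(plan, reviewer)
print("executed:", done)
print("blocked at:", blocked.name if blocked else None)
```

Stopping at the first rejection is a conservative choice; a workflow whose later steps do not depend on the rejected one could skip it and continue.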

Use Cases and Applications

Natural language query systems excel at ad hoc analysis, exploratory data investigation, and providing quick answers to business questions. They serve business users who need occasional data access and simple analytics. These systems democratize data access across an organization by allowing anyone to query data using everyday language.

Agent systems are better suited for repetitive data operations, complex multi-step workflows, and situations requiring integration across multiple data platforms. They serve as virtual data engineers that can handle substantial portions of the data pipeline development and maintenance workload. Agent systems are particularly valuable for organizations with high volumes of data operations and limited data engineering resources.

The Current State and Future of Agent Systems

Agent systems represent the present and near-future focus for AI in data management. Understanding where this technology stands today and where it's heading helps organizations prepare for the evolving landscape.

Adoption Trends in Agent Systems

The shift toward agent systems reflects broader trends in AI development. Organizations that implemented natural language querying effectively often reported shorter time-to-insight and more active data users. Yet after the initial excitement around chat with my data systems and conversational analytics, many discovered that while these tools improved data accessibility, they didn't fundamentally reduce the workload on data teams. Questions still needed to be answered, pipelines still needed to be built, and reports still needed to be created.

Agent systems address this limitation by actually performing the work rather than simply facilitating access to information. This capability explains why agent systems have become the focal point for AI and data innovation and are expected to remain at the center of the conversation in the near term.

Major data platforms are racing to incorporate agent capabilities: Microsoft has introduced the Researcher and Analyst reasoning agents in Microsoft 365 Copilot, and Snowflake has launched Snowflake Intelligence for conversational AI experiences. These offerings are still maturing, but early implementations show promising capabilities.

Challenges and Considerations for Agent Systems

Despite their promise, agent systems face several challenges that organizations must consider. Trust and verification remain significant concerns. When a human data engineer builds a pipeline, other team members can review the code and logic. When an agent system builds the same pipeline autonomously, organizations need mechanisms to verify the work and ensure it meets quality standards.
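
Part of that verification can be automated. The sketch below runs a few acceptance checks on the output of an agent-built pipeline before it is accepted: row counts reconcile with the source, required columns contain no nulls, and the key column has no duplicates. The `verify_load` function, the table layout, and the choice of checks are assumptions; a real team would combine checks like these with human code review.

```python
import sqlite3
from typing import List

def verify_load(conn: sqlite3.Connection, source_count: int, table: str,
                key: str, required: List[str]) -> List[str]:
    """Return a list of failed checks; an empty list means the load passes.

    Table and column names are interpolated into SQL, so they must come from
    trusted pipeline configuration, never from user input.
    """
    failures = []
    (count,) = conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()
    if count != source_count:
        failures.append(f"row count {count} != source {source_count}")
    for col in required:
        (nulls,) = conn.execute(
            f"SELECT COUNT(*) FROM {table} WHERE {col} IS NULL").fetchone()
        if nulls:
            failures.append(f"{nulls} null(s) in {col}")
    (dupes,) = conn.execute(
        f"SELECT COUNT(*) FROM (SELECT {key} FROM {table} "
        f"GROUP BY {key} HAVING COUNT(*) > 1)").fetchone()
    if dupes:
        failures.append(f"{dupes} duplicated {key} value(s)")
    return failures

# Demo: a load with a missing row, a null customer, and a duplicated id.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (id INTEGER, customer TEXT, amount REAL)")
conn.executemany("INSERT INTO sales VALUES (?, ?, ?)",
                 [(1, "Acme", 10.0), (2, None, 5.0), (2, "Globex", 7.5)])

failures = verify_load(conn, source_count=4, table="sales", key="id",
                       required=["customer", "amount"])
for failure in failures:
    print("FAIL:", failure)
```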

Data governance becomes more complex with agent systems. These systems need appropriate permissions to access sensitive data, make changes to data infrastructure, and deploy new pipelines. Organizations must develop governance frameworks that allow agent systems to operate efficiently while maintaining security and compliance.
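
One way to express such a framework is a least-privilege policy that the agent runtime checks before every action. The sketch below is hypothetical: the agent names, datasets, actions, and the rule that granted actions on sensitive data or deployments are escalated for human review are illustrative assumptions, not the model of any particular platform.

```python
from dataclasses import dataclass

@dataclass(frozen=True)  # frozen makes grants hashable so they can live in a set
class Grant:
    agent: str
    action: str   # e.g. "read", "write", "deploy"
    dataset: str

SENSITIVE = {"hr.salaries"}

GRANTS = {
    Grant("pipeline-agent", "read", "crm.opportunities"),
    Grant("pipeline-agent", "write", "warehouse.sales"),
    Grant("pipeline-agent", "deploy", "warehouse.sales"),
    Grant("report-agent", "read", "warehouse.sales"),
    Grant("report-agent", "read", "hr.salaries"),
}

AUDIT_LOG = []

def authorize(agent: str, action: str, dataset: str) -> str:
    """Deny by default; escalate granted actions that are high risk."""
    if Grant(agent, action, dataset) not in GRANTS:
        decision = "deny"
    elif dataset in SENSITIVE or action == "deploy":
        decision = "needs_review"
    else:
        decision = "allow"
    AUDIT_LOG.append((agent, action, dataset, decision))  # every decision is recorded
    return decision

requests = [
    ("pipeline-agent", "write", "warehouse.sales"),
    ("pipeline-agent", "deploy", "warehouse.sales"),
    ("report-agent", "read", "hr.salaries"),
    ("report-agent", "write", "warehouse.sales"),
]
for req in requests:
    print(req, "->", authorize(*req))
```

Recording denied requests as well as allowed ones gives auditors a view of what agents attempted, not only what they did.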

The learning curve for working with agent systems differs from traditional data tools. Data teams need to learn how to effectively task and supervise agent systems, provide appropriate context for tasks, and integrate agent output into broader data strategies. This represents a shift in required skills for data professionals.

The Convergence of Natural Language Query and Agent Systems

While this discussion has treated chat with my data systems and agent systems as distinct directions in AI, the reality is more nuanced. These approaches are beginning to converge as technology advances.

Hybrid Approaches to AI and Data

Some emerging platforms combine elements of both conversational analytics and agent systems. A user starts with a conversational query about the data, and the system responds not only by answering the question but also by offering to build a pipeline or create a dashboard. This hybrid approach provides the accessibility of natural language query systems with the productivity benefits of agent systems.

Microsoft's Fabric Data Agent and Snowflake Intelligence, for example, incorporate aspects of both approaches, functioning as agents that can find and process data autonomously while also including conversational interfaces. This flexibility allows different users to engage with the AI in ways that match their needs and comfort levels.

The convergence of these two major directions in AI for data management suggests that the future may not involve choosing between them but rather implementing platforms that support both interaction models. Organizations benefit from having natural language query capabilities for ad hoc questions while also leveraging agent capabilities for ongoing operations.

Conclusion: Embracing Both Directions in AI for Data

The evolution of AI in data management has produced two major directions that organizations must understand and navigate. Natural language query systems and conversational analytics brought natural language interfaces and improved accessibility to data platforms, democratizing data access across organizations. These systems remain valuable for ad hoc analysis and exploration, providing a user-friendly way to interact with data through everyday language.

Agent systems represent the next frontier, moving beyond answering questions to actually performing work. These systems can build data pipelines, manage transformations, generate reports, and complete complex workflows with minimal human intervention. As technology companies like Microsoft introduce reasoning agents and other vendors invest heavily in agent capabilities, agent systems are likely to dominate the conversation around AI and data management in the coming years.

Both chat with my data systems and agent systems require strong metadata infrastructure and semantic layers to function effectively. Organizations that build this foundation position themselves to succeed with both approaches. Tools like Dawiso support both major directions in AI by providing the metadata management capabilities these systems need.

The future likely involves not choosing between these two major directions in AI but rather implementing solutions that support both natural language query and agent capabilities. Organizations will benefit from having conversational analytics interfaces for exploration and questions while also leveraging agent systems for automation and efficiency. The most successful data organizations will be those that thoughtfully integrate both approaches, using each where it provides the most value.

As agent systems mature and become more widely adopted, they will transform how data teams work. Rather than replacing human expertise, agent systems will augment it, handling routine tasks and allowing data professionals to focus on strategy, innovation, and complex problem-solving. Organizations that prepare now for this shift by investing in metadata management, building data quality programs, and developing team capabilities will be best positioned to thrive in an AI-driven data future.

The journey from natural language query and conversational analytics to sophisticated agent systems represents a fundamental shift in the relationship between AI and data operations. Organizations that understand both major directions in AI and strategically implement these technologies will gain significant competitive advantages through faster insights, more efficient operations, and better data-driven decision-making.
